Where intelligence is permitted to act — and required to stop.

The purpose of AI and automation is not to remove the human — but to return them to the center.

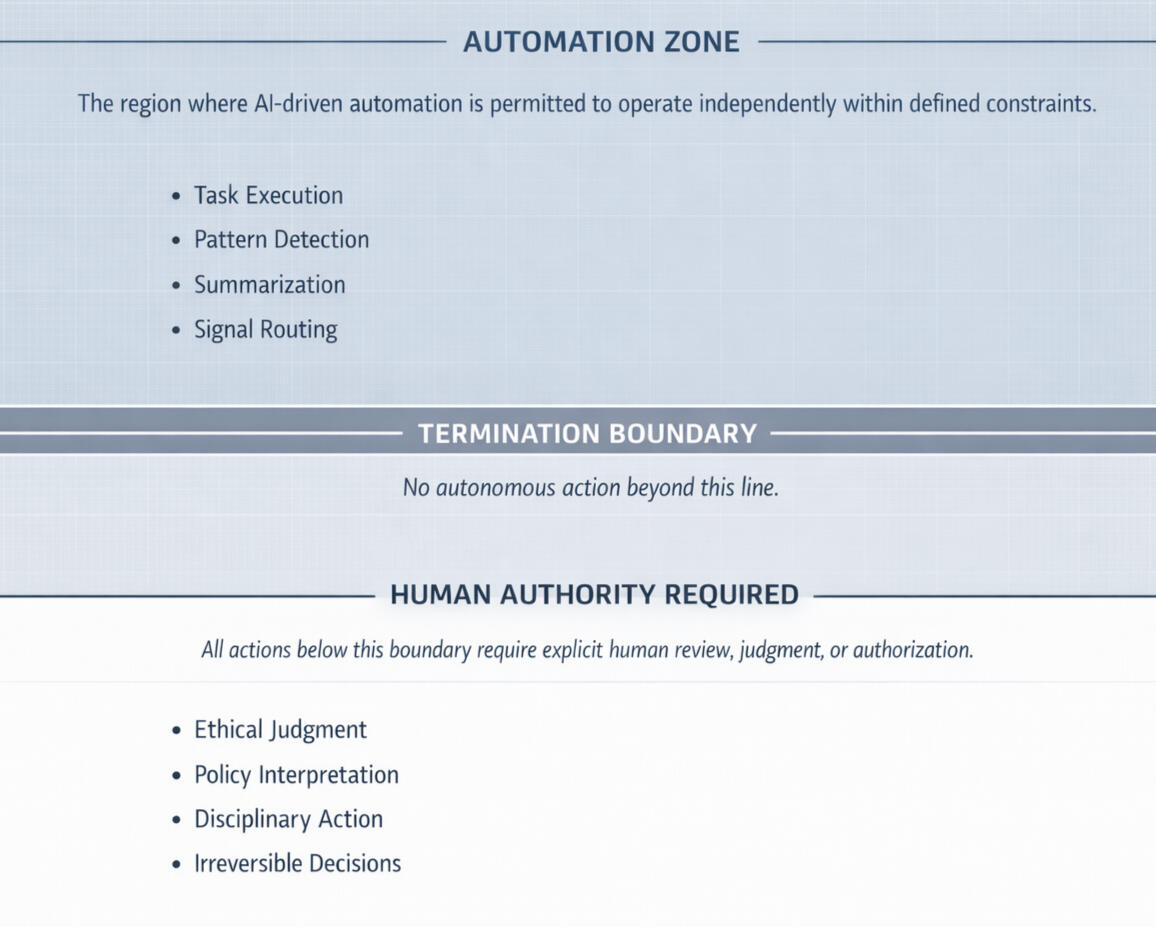

Hard Stops

Automation Termination Boundaries

This section defines the non-negotiable limits beyond which automation and AI systems are structurally incapable of acting.

What This Governs

Automation Termination Boundaries establish the hard stop lines for intelligent systems. They govern:

- Which actions automation is explicitly forbidden from taking

- The precise conditions under which autonomous execution must halt immediately

- The domains where confidence, optimization, or pattern recognition are irrelevant

- The architectural separation between assistance and authority

These boundaries are enforced by system design, not policy, preference, or discretion.

Why This Exists

Institutions are not afraid of AI doing too little.

They are afraid of it doing one thing too much.

Most failures in intelligent systems do not occur because automation was inaccurate; they occur because it was unchecked. When stopping conditions are vague, authority becomes blurred. When authority is blurred, accountability collapses.

This section exists to remove ambiguity entirely.

Automation Termination Boundaries prove that autonomy is conditional, limited, and revocable by design. They demonstrate that restraint is not an afterthought; it is foundational.

What This Guarantees

With Automation Termination Boundaries in place, organizations can rely on the following guarantees:

- Automation cannot proceed beyond defined limits, regardless of confidence

- No autonomous action can cross into protected human judgment domains

- There are no “edge cases” where the system decides to continue

- All escalation beyond this boundary requires explicit human authority

This is the difference between trusted automation and runaway optimization.

Structural Principle

Automation does not earn permission by being accurate.

It is permitted to act only where it is explicitly allowed, and nowhere else.

Termination is not failure.

Termination is governance.
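The structural principle above can be illustrated with a default-deny allow-list. This is a minimal sketch, not a description of any actual implementation: the action names, the `Request` type, and the `run` function are all hypothetical. The point it shows is that confidence is recorded but never consulted when deciding whether an action is in bounds.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    EXECUTED = "executed"
    TERMINATED = "terminated"


@dataclass(frozen=True)
class Request:
    action: str
    confidence: float  # kept for the record only; never used to grant permission


# Hypothetical allow-list: anything not named here is out of bounds.
PERMITTED_ACTIONS = frozenset({"summarize_ticket", "route_workflow"})


def run(request: Request) -> Outcome:
    """Execute only explicitly permitted actions; halt on everything else."""
    if request.action not in PERMITTED_ACTIONS:
        # Termination is the governed result, not an error path.
        return Outcome.TERMINATED
    return Outcome.EXECUTED


low_confidence_but_allowed = run(Request("route_workflow", confidence=0.10))
high_confidence_but_forbidden = run(Request("issue_discipline", confidence=0.999))
```

In this sketch a permitted action runs even at low confidence, and a forbidden action halts even at very high confidence, because the check is on the action's name alone.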

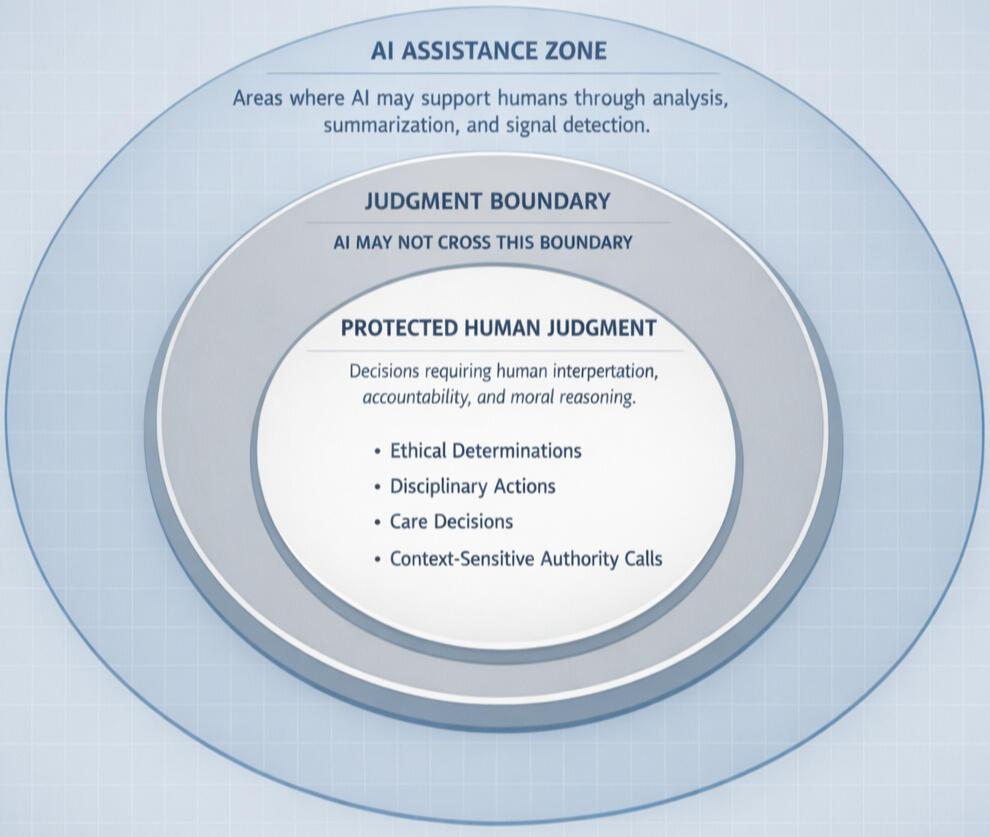

Sacred Decisions

Protected Human Judgments

This section defines the decisions that intelligent systems are explicitly forbidden from making, optimizing, or finalizing.

What This Governs

Protected Human Judgments establish human-only decision domains within an intelligent system. These are areas where automation may support, but is never permitted to decide.

This includes decisions that require:

• moral reasoning

• contextual discretion

• empathy and situational awareness

• accountable human authority

Examples of protected domains include:

• disciplinary determinations

• care and well-being decisions

• context-sensitive leadership judgment

• ethical interpretations of policy

• outcomes with irreversible human impact

In these domains, intelligence may prepare information, but authority never transfers.

Why It Exists

Optimization without restraint erodes trust.

Many systems fail not because they are inaccurate, but because they attempt to flatten human complexity into metrics. When judgment is reduced to confidence scores or efficiency thresholds, people feel surveilled, dehumanized, or overridden.

This section exists to make a different claim:

Some decisions are protected because they are human.

Not despite it.

Protected Human Judgments ensure that technology never replaces the very reasoning it is meant to support.

This is trauma-informed design at a structural level: not as a value statement, but as architecture.

What This Guarantees

This doctrine guarantees that:

• No disciplinary, ethical, or care-related outcome is ever decided by automation

• Human discretion cannot be bypassed by confidence, urgency, or pattern recognition

• Efficiency never outranks dignity

• Empathy is not treated as a system defect

The system is intentionally incapable of finalizing these decisions.

Not paused.

Not rate-limited.

Not discouraged.

Incapable — by design.
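One way to picture "incapable by design" is an interface in which automation has no code path that produces a final decision. The sketch below is an assumption made for illustration: the `Recommendation`, `HumanAuthority`, `FinalDecision`, and `finalize` names are hypothetical, and a runtime type check in Python is a much weaker guarantee than true architectural separation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HumanAuthority:
    """Hypothetical identity of an accountable person."""
    name: str
    role: str


@dataclass(frozen=True)
class Recommendation:
    """What automation may produce: prepared information, never a decision."""
    domain: str
    summary: str


@dataclass(frozen=True)
class FinalDecision:
    domain: str
    outcome: str
    decided_by: HumanAuthority


def finalize(rec: Recommendation, outcome: str, decided_by: HumanAuthority) -> FinalDecision:
    """Create a final decision; refuses anything that is not a human authority."""
    if not isinstance(decided_by, HumanAuthority):
        raise TypeError("a protected decision requires a human authority")
    return FinalDecision(rec.domain, outcome, decided_by)


rec = Recommendation("disciplinary", "Three late arrivals this month; context attached.")
decision = finalize(rec, "no action; family circumstances", HumanAuthority("Dana", "Principal"))
```

Automation in this sketch can only build a `Recommendation`. The only constructor path to a `FinalDecision` runs through a named person.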

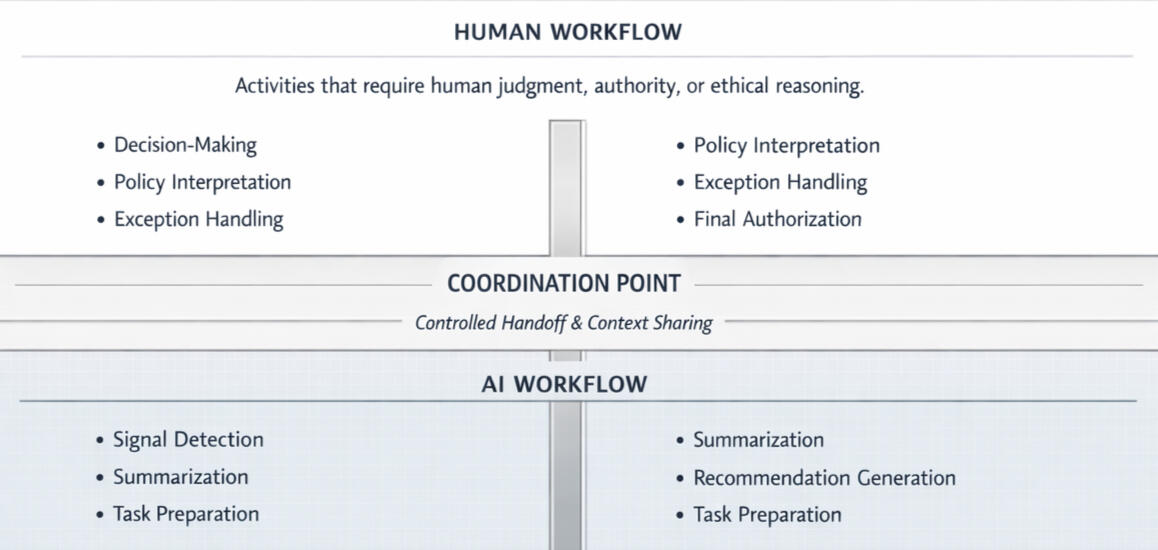

Clear Handoffs

Human–AI Coordination Rules

This section defines how humans and intelligence systems work together without overlap, surprise, or authority confusion.

What This Governs

Human–AI Coordination Rules establish explicit interaction contracts between people and intelligent systems.

They define:

• Who acts first

• Who verifies

• Who escalates

• Who owns the final outcome

This doctrine governs coordination, not capability.

It does not ask what the system can do; it defines how responsibility is shared without collision.

Why This Exists

Most system failures do not come from bad intelligence.

They come from unclear coordination.

When humans and systems operate in parallel without rules:

• Humans assume the system handled it

• Systems assume a human will intervene

• Responsibility disappears into the gap

This is how “shadow IT,” alert fatigue, duplicated effort, and silent failures are born.

This section exists to eliminate ambiguity before it can cause harm.

Core Principles

Human–AI coordination at CortexForge is governed by four non-negotiable principles:

1. No Overlap

Humans and AI never perform the same responsibility simultaneously.

Every task has a single owner at any given moment.

2. Explicit Handoffs

Authority transfer is intentional, visible, and logged.

The system never assumes permission.

3. Predictable Behavior

The system behaves consistently under stress.

Humans are never surprised by automated actions.

4. Outcome Ownership

Every outcome has a human owner, even when automation executes the task.

What the System May Do

Within coordination rules, intelligence systems may:

• Detect signals

• Summarize information

• Prepare tasks

• Recommend actions

• Route workflows

These actions prepare decisions; they do not replace them unless explicitly permitted elsewhere in Doctrine.

What the System May Not Do

Without human coordination approval, the system may not:

• Override human judgment

• Execute irreversible actions

• Change authority scope

• Reassign responsibility

• Continue when escalation is triggered

The system is designed to pause gracefully, not push forward.

What This Guarantees

This doctrine guarantees that:

• Humans never fight the system

• The system never surprises humans

• Responsibility never falls between roles

• Accountability is always traceable

Coordination is calm, predictable, and deliberate, even in high-pressure environments.
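The coordination principles above (single owner, explicit and logged handoffs, a human outcome owner, and pausing on escalation) can be sketched as a small task record. Everything here is hypothetical and for illustration only: the `Task` class, its field names, and the actor names are assumptions, not a description of CortexForge's system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Task:
    name: str
    owner: str              # exactly one owner at any given moment
    accountable_human: str  # owns the outcome even when automation executes
    handoff_log: list = field(default_factory=list)

    def may_act(self, actor: str) -> bool:
        """Only the current owner may act; there is no shared ownership."""
        return actor == self.owner

    def hand_off(self, new_owner: str, approved_by: str) -> None:
        """Transfer ownership explicitly and record who approved it."""
        if not approved_by:
            raise PermissionError("a handoff needs a named approver")
        self.handoff_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "from": self.owner,
            "to": new_owner,
            "approved_by": approved_by,
        })
        self.owner = new_owner

    def escalate(self, reason: str) -> None:
        """Escalation returns the task to the accountable human, so automation stops."""
        self.hand_off(self.accountable_human, approved_by=f"escalation: {reason}")


task = Task("triage-ticket-1", owner="ai-agent", accountable_human="dana")
task.escalate("irreversible action requested")
```

After the escalation, `may_act("ai-agent")` is false and the log holds one entry showing the transfer back to the human owner.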

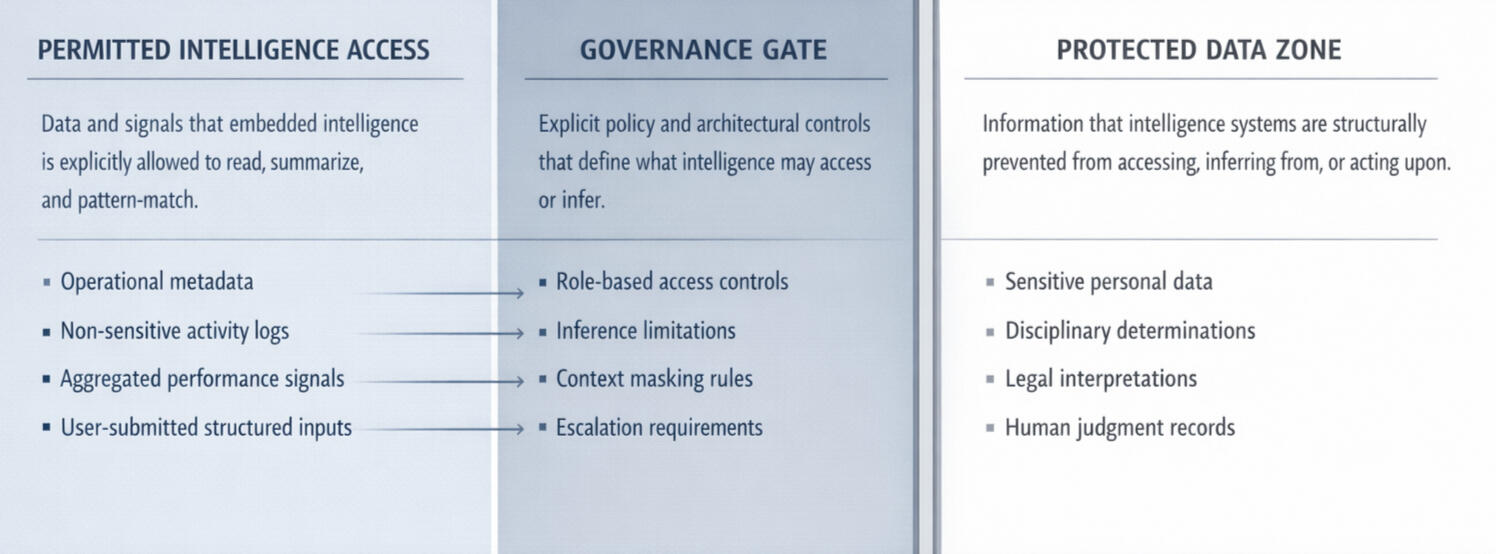

Governed Insight

Governed Intelligence Boundaries

This section defines what intelligence systems are allowed to see, infer, and combine, and what is structurally prohibited, regardless of capability.

What This Governs

Governed Intelligence Boundaries establish explicit architectural limits on intelligence behavior.

They define:

• Which data domains intelligence layers may access

• What types of inference are permitted or prohibited

• Which datasets may never be cross-referenced

• How visibility, inference, and action are separated by design

This is not a policy document.

These boundaries are enforced at the system architecture level.

Intelligence does not “request” access.

It is either structurally allowed, or it cannot see the data at all.

Why This Exists

When intelligence systems fail governance audits, it is usually without malicious intent.

The failure mode is almost always the same: unchecked inference.

When systems are allowed to freely combine datasets, they begin to surface insights that were never explicitly authorized, even if each individual dataset was permissible on its own.

Institutions are not afraid of intelligence.

They are afraid of what intelligence might infer without consent.

This section exists to ensure:

• Insight never outruns authorization

• Correlation does not become surveillance

• Capability does not silently expand over time

Governance must be architectural, not reactive.

What This Guarantees

Governed Intelligence Boundaries guarantee that:

• Intelligence visibility is explicitly scoped

• Inference pathways are intentionally constrained

• Sensitive domains remain structurally isolated

• Being able to infer something does not mean the system is allowed to

This creates systems that are:

• compliant by construction

• auditable by design

• trusted by leadership

• defensible under regulatory scrutiny

The system cannot surprise its owners, because it cannot see what it is not permitted to know.
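As a rough illustration of scoped visibility and prohibited cross-referencing, the sketch below checks a query against two rules: the layer must be allowed to see every requested domain, and the requested set must not contain a forbidden combination. The layer names, data domains, and the `authorize_query` function are hypothetical assumptions; a real system would enforce this below the application layer rather than in a lookup table.

```python
# Hypothetical scopes: which data domains each intelligence layer can see at all.
VISIBLE_DOMAINS = {
    "helpdesk_assistant": frozenset({"tickets", "device_inventory"}),
    "wellbeing_dashboard": frozenset({"attendance", "health_records"}),
}

# Hypothetical pairs that may never be cross-referenced in one query,
# even by a layer that is allowed to see each domain on its own.
FORBIDDEN_COMBINATIONS = [frozenset({"attendance", "health_records"})]


def authorize_query(layer: str, domains: set[str]) -> bool:
    """Allow a query only if it stays in scope and avoids forbidden combinations."""
    requested = frozenset(domains)
    allowed = VISIBLE_DOMAINS.get(layer, frozenset())  # unknown layers see nothing
    if not requested <= allowed:
        return False
    return not any(combo <= requested for combo in FORBIDDEN_COMBINATIONS)


single_domain_ok = authorize_query("wellbeing_dashboard", {"attendance"})
combined_blocked = authorize_query("wellbeing_dashboard", {"attendance", "health_records"})
out_of_scope = authorize_query("helpdesk_assistant", {"health_records"})
```

The second check is what addresses the inference problem described above: each dataset is individually permitted for that layer, yet the combination is refused.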

Nothing Untraceable

Auditability & Accountability Chains

This section defines how every action, recommendation, and decision within an intelligent system is permanently traceable to a human authority, a system event, and a moment in time.

Auditability is not a reporting feature.

It is a structural guarantee.

What This Governs

Auditability & Accountability Chains govern:

• How actions are initiated, reviewed, approved, modified, or stopped

• How AI recommendations are recorded alongside human decisions

• How responsibility is preserved across automated and human workflows

• How outcomes remain explainable long after execution

This doctrine applies to every system action, including:

• automated classifications

• summaries and recommendations

• escalations and approvals

• workflow executions

• policy actions and remediations

Nothing occurs outside the chain.

Why This Exists

Institutions do not lose trust because mistakes happen.

They lose trust because no one can explain what happened.

Most automation failures are not technical failures; they are memory failures:

• decisions without authors

• actions without context

• systems that cannot answer “why”

This section exists to eliminate that failure mode entirely.

Not by policy.

By architecture.

Core Principle

If an action cannot be explained, it cannot exist.

Every outcome must be:

• attributable

• reviewable

• defensible

At any point: days, months, or years later.

What This Guarantees

This doctrine guarantees that:

• No anonymous actions occur

Every action has an initiating signal, a responsible actor, and a recorded outcome.

• No invisible decisions exist

AI recommendations are logged alongside the human response — approval, rejection, or modification.

• No authority is implied

Authority is explicitly exercised, recorded, and preserved.

• No automation escapes review

Even fully permitted automation remains auditable after execution.

This creates systems that can say, without hesitation:

“We can explain every outcome.”

Structural Components

Auditability & Accountability Chains are enforced through:

• Event Logs

Timestamped records of system signals, inputs, and triggers.

• Decision Context Capture

The state of information presented at the moment a decision was made.

• Actor Identity Binding

Clear linkage between actions and the human or system entity responsible.

• Outcome Recording

What occurred, what was intended, and what followed.

• Immutable History

Records that persist regardless of personnel changes or system evolution.

This is not surveillance.

It is institutional continuity.

Relationship to Human Authority

Auditability does not reduce human power.

It protects it.

By preserving context and intent, leaders are not judged by outcomes alone, but by the information and constraints present at the time of action.

This doctrine ensures accountability without blame, and authority without ambiguity.
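The structural components listed earlier in this section (event logs, decision context, actor identity binding, outcome recording, and immutable history) could be approximated with an append-only, hash-chained log. This is a sketch under stated assumptions: the `AuditChain` class and its field names are hypothetical, and hash chaining is one common technique chosen here for illustration, not something the doctrine specifies. It makes later edits detectable rather than impossible.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


class AuditChain:
    """Append-only log in which each record commits to the one before it."""

    def __init__(self):
        self._records = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, actor: str, signal: str, recommendation: str,
               human_response: str, outcome: str) -> dict:
        record = {
            "at": datetime.now(timezone.utc).isoformat(),  # moment in time
            "actor": actor,                                # actor identity binding
            "signal": signal,                              # initiating event
            "recommendation": recommendation,              # what the AI proposed
            "human_response": human_response,              # approve / reject / modify
            "outcome": outcome,                            # what actually happened
            "prev_hash": self._records[-1]["hash"] if self._records else GENESIS,
        }
        record["hash"] = self._digest(record)
        self._records.append(record)
        return record

    def verify(self) -> bool:
        """Return True only if no record was altered, removed, or reordered."""
        prev = GENESIS
        for record in self._records:
            if record["prev_hash"] != prev or record["hash"] != self._digest(record):
                return False
            prev = record["hash"]
        return True


chain = AuditChain()
chain.append("dana", "ticket-opened", "route to network team", "approved", "routed")
chain.append("lee", "alert-raised", "disable account", "rejected", "no action taken")
intact = chain.verify()
```

Because each record stores the hash of its predecessor, changing any earlier field causes `verify()` to return false, which is the property that lets an institution explain an outcome long after the people involved have moved on.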